Claude’s AI Artefacts Are a Game Changer for Education!

The latest Claude update makes it easier than ever to build smart AI educational apps.

I've been meaning to start blogging again for a while now, but returning to my old Medium just didn’t feel right. Between projects and life, most of my thoughts have been scattered across random posts on X. But something shifted this week.

The latest update to Claude Artefacts dropped, and suddenly I’m buzzing with ideas. This felt like the perfect moment to finally launch a Substack and explore what I see as a major leap forward: the new, AI-integrated incarnation of Claude Artefacts and what it means for building smart educational apps.

So what’s actually new?

Claude Artefacts have been around for about a year. The core idea is simple but powerful: you describe an app, often a client-side, interactive web app, and Claude generates the code, gives you a live preview, and lets you test and publish it. Tools like ChatGPT’s code interpreter and Gemini’s canvas offer similar experiences. They make it incredibly easy to prototype and iterate on ideas: describe, generate, test, refine, publish.

So why is this latest Claude update such a big deal?

Previously, these apps were still conventional in one key sense - they used deterministic, hardcoded logic. Great for tools and visualisations, but not truly smart. The recent update changes that. You can now describe where your app should call the Claude API, meaning you can integrate live LLM responses directly into your app’s functionality.

Combine that with Claude’s improved ability to one-shot a complete, working app scaffold - UI and backend glue included - and you’ve suddenly got a way to build fully functioning AI-powered apps from a single prompt. That’s a game changer.

Meta Prompting Behind the Scenes!

When building with the new Claude Artefacts, you’re not writing full codebases or the actual prompts that your app needs to make - you’re just describing what your app should do, and when it should make API calls to Claude. That’s it. Claude takes care of the rest.

The real magic happens behind the scenes: Claude not only generates the app UI and logic, it also writes the prompts that your app will use when calling the Claude API. It structures the JSON payloads, handles the inputs and outputs, and wires everything up automatically. In other words, it’s an AI writing prompts for itself, and embedding those prompts directly into your app’s runtime.

It’s meta. It’s elegant. And it feels like a preview of where app development is headed - describing intent and letting the AI scaffold everything around it, including how it talks to itself.
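To make the “AI writing prompts for itself” idea concrete, here is a minimal sketch of the kind of glue code that ends up inside such an app: a prompt that wraps the user’s input and asks for JSON only, plus defensive parsing of the reply. It assumes a `callClaude(prompt: string): Promise<string>` function. The exported code does reference a `callClaude()` function, but its real signature isn’t documented, so treat that shape, and the helper names `buildJsonPrompt`, `parseJsonReply` and `askForJson`, as hypothetical rather than what Claude actually generates.

```typescript
// Hypothetical signature for the in-app model call; the real one may differ.
type CallClaude = (prompt: string) => Promise<string>;

// Wrap the task and the user's input in a prompt that asks for JSON only.
function buildJsonPrompt(task: string, input: Record<string, string>): string {
  return [
    task,
    "Input:",
    JSON.stringify(input, null, 2),
    "Respond ONLY with valid JSON. Do not include any other text.",
  ].join("\n\n");
}

// Models sometimes wrap JSON in Markdown code fences; strip them before parsing.
function parseJsonReply(raw: string): unknown {
  const cleaned = raw
    .trim()
    .replace(/^`{3}(?:json)?\s*/i, "")
    .replace(/\s*`{3}$/, "");
  return JSON.parse(cleaned);
}

// One round trip: build the prompt, call the model, parse the reply.
async function askForJson(
  callClaude: CallClaude,
  task: string,
  input: Record<string, string>,
): Promise<unknown> {
  const reply = await callClaude(buildJsonPrompt(task, input));
  return parseJsonReply(reply);
}
```

Returning `unknown` forces the calling code to check the parsed shape before rendering it, which matters because the model’s output is not guaranteed to match the requested format.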

Implications for Educators

This shift has big implications for how we think about educational technology.

We’re now seeing the lines blur between traditional chat-based AI interfaces and generative UIs that include embedded AI functionality. With Claude Artefacts, you’re not just building a chatbot or a static app. You’re designing learning tools that can think, respond, and adapt on the fly.

Imagine typing (or speaking) into existence a dynamic equation-solving tutor with intelligent student support! Or generating multi-perspective elaboration UIs for any topic, with dynamic feedback that pulls in diverse perspectives the student hadn’t thought of.

It’s a profound shift. We’re moving from designing static digital tools to orchestrating interactive, intelligent experiences with language as the interface.

Show Me Examples

To put all this to the test, I set myself a challenge: build six example apps in a single day to showcase what’s now possible. Each app explores a different use case or capability. In most cases, Claude was able to one-shot a working app based purely on my initial description. At most, I gave minimal feedback to refine functionality or layout. The results weren’t just impressive - they were a glimpse into a new development paradigm: describe a smart app, generate it, test it, refine and publish.

I'll walk through each example below and share what surprised me, what worked instantly, and where the edges still needed testing and feedback.

Six Thinking Hats

A learner can select a hat or get one randomly allocated. The hat text is generated by personas that the LLM creates on the fly based on the topic. The learner enters their own text and can copy the generated Markdown.

Try: Six Thinking Hats
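The hat allocation and persona step described above could look roughly like the sketch below. The six colours and their focus areas follow de Bono’s Six Thinking Hats; the prompt wording and the names `randomHat`, `personaPrompt` and `toMarkdown` are hypothetical, not the app’s actual code.

```typescript
interface Hat {
  colour: string;
  focus: string;
}

// De Bono's six hats and the kind of thinking each one represents.
const HATS: readonly Hat[] = [
  { colour: "White", focus: "facts and information" },
  { colour: "Red", focus: "feelings and intuition" },
  { colour: "Black", focus: "caution and risks" },
  { colour: "Yellow", focus: "benefits and optimism" },
  { colour: "Green", focus: "creativity and new ideas" },
  { colour: "Blue", focus: "managing the thinking process" },
];

// Random allocation; the random source is injectable so it can be tested.
function randomHat(rng: () => number = Math.random): Hat {
  return HATS[Math.floor(rng() * HATS.length)];
}

// Hypothetical prompt asking the model to invent a topic-specific persona for a hat.
function personaPrompt(hat: Hat, topic: string): string {
  return (
    `Invent a persona who looks at "${topic}" through the ${hat.colour} Hat (${hat.focus}). ` +
    "Give the persona a name and write one short paragraph in their voice."
  );
}

// Combine the persona's text and the learner's own text as copyable Markdown.
function toMarkdown(hat: Hat, topic: string, personaText: string, learnerText: string): string {
  return [
    `## ${hat.colour} Hat: ${topic}`,
    "",
    `**Persona:** ${personaText}`,
    "",
    `**My thinking:** ${learnerText}`,
  ].join("\n");
}
```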

Multi-Perspective Elaboration

This app explores dynamic UI generation: the learner first enters their ideas for each perspective, then the AI jumps in to add perspectives they haven’t mentioned. Again, a Markdown version can be copied.

Try: Multi-Perspective Elaboration

Business Model Canvas

The user enters a business title and description, and the AI automatically fills in a business model canvas. The user can then edit it and get AI feedback.

Try: Business Model Canvas Helper

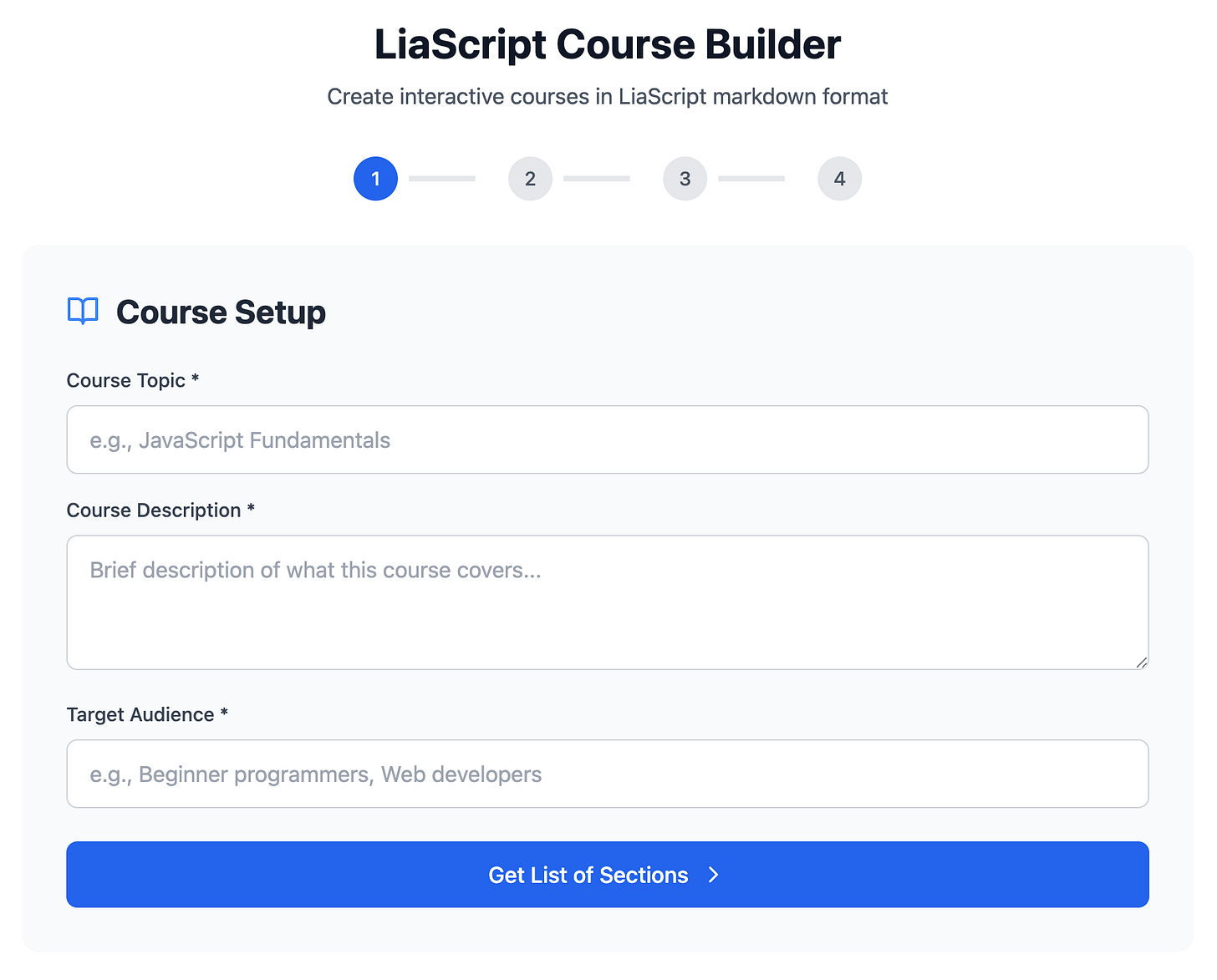
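Filling in a canvas from a single call works best when the app asks for a fixed JSON shape and checks it before rendering. The sketch below uses the nine building blocks of the Business Model Canvas; the camelCase key names, the prompt wording and the helpers `fillPrompt`, `toCanvas` and `feedbackPrompt` are assumptions for illustration, not the app’s code.

```typescript
// The nine building blocks of the Business Model Canvas, as hypothetical JSON keys.
const CANVAS_BLOCKS = [
  "keyPartners",
  "keyActivities",
  "keyResources",
  "valuePropositions",
  "customerRelationships",
  "channels",
  "customerSegments",
  "costStructure",
  "revenueStreams",
] as const;

type CanvasBlock = (typeof CANVAS_BLOCKS)[number];
type Canvas = Record<CanvasBlock, string>;

// Prompt for the initial fill-in, asking for exactly these keys.
function fillPrompt(title: string, description: string): string {
  return (
    `Fill in a Business Model Canvas for "${title}": ${description}\n` +
    `Respond ONLY with a JSON object whose string-valued keys are: ${CANVAS_BLOCKS.join(", ")}.`
  );
}

// Validate a parsed model reply before rendering it as an editable canvas.
function toCanvas(data: unknown): Canvas {
  if (typeof data !== "object" || data === null) throw new Error("Expected a JSON object");
  const obj = data as Record<string, unknown>;
  const canvas = {} as Canvas;
  for (const block of CANVAS_BLOCKS) {
    const value = obj[block];
    if (typeof value !== "string") throw new Error(`Missing or invalid block: ${block}`);
    canvas[block] = value;
  }
  return canvas;
}

// Prompt for feedback on the learner's edited canvas.
function feedbackPrompt(title: string, canvas: Canvas): string {
  return (
    `Give concise, constructive feedback on this Business Model Canvas for "${title}":\n` +
    JSON.stringify(canvas, null, 2)
  );
}
```

Rejecting an incomplete reply up front means the app can retry the call instead of showing a half-empty canvas.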

Generating LiaScript Courses

LiaScript is a Markdown-based course authoring format. I previously built a Colab notebook that used LangChain to make the LLM calls and build a full course. This version lets the user enter the course topic, description and target audience, then generates section titles that the user can edit, and finally makes an LLM call in a loop for each section. Once all the sections are collated, the final LiaScript Markdown can be pasted into the LiaScript Live Editor.

Try: Liascript Course Builder
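The final stage of that workflow, one call per section in a loop and then collation, can be sketched as follows. This assumes the same hypothetical `callClaude(prompt): Promise<string>` shape; `CourseSpec`, `sectionPrompt` and `buildCourse` are illustrative names, and the sketch emits plain Markdown headings only, without any LiaScript-specific metadata.

```typescript
type CallClaude = (prompt: string) => Promise<string>;

interface CourseSpec {
  topic: string;
  description: string;
  audience: string;
}

// Hypothetical per-section prompt; the real app's wording is not published.
function sectionPrompt(spec: CourseSpec, title: string): string {
  return [
    `Write the "${title}" section of a course on ${spec.topic}.`,
    `Course description: ${spec.description}`,
    `Target audience: ${spec.audience}`,
    `Return Markdown only, starting with the heading "## ${title}".`,
  ].join("\n");
}

// Sequential build: one model call per (user-edited) section title, awaited in order,
// then everything is collated into a single Markdown document.
async function buildCourse(
  callClaude: CallClaude,
  spec: CourseSpec,
  sectionTitles: string[],
): Promise<string> {
  const parts: string[] = [`# ${spec.topic}`, "", spec.description];
  for (const title of sectionTitles) {
    const section = await callClaude(sectionPrompt(spec, title));
    parts.push("", section.trim());
  }
  return parts.join("\n");
}
```

Because each `await` finishes before the next call starts, total time grows linearly with the number of sections.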

Solving Equations Tutor

This is something I’ve always wanted to make but, until now, never had the time for! It’s an intelligent equation-solving tutor with a modern UI that lets the learner solve an equation step by step, moving components to either the left or right side of the equation and flipping their signs. AI writes the step annotations, and the learner can chat with an AI tutor at any time. Initially I wanted the equation components to be draggable, but this was quite buggy. Claude came up with the idea of using buttons to move components and flip their signs!

Try: Solving Equations Tutor

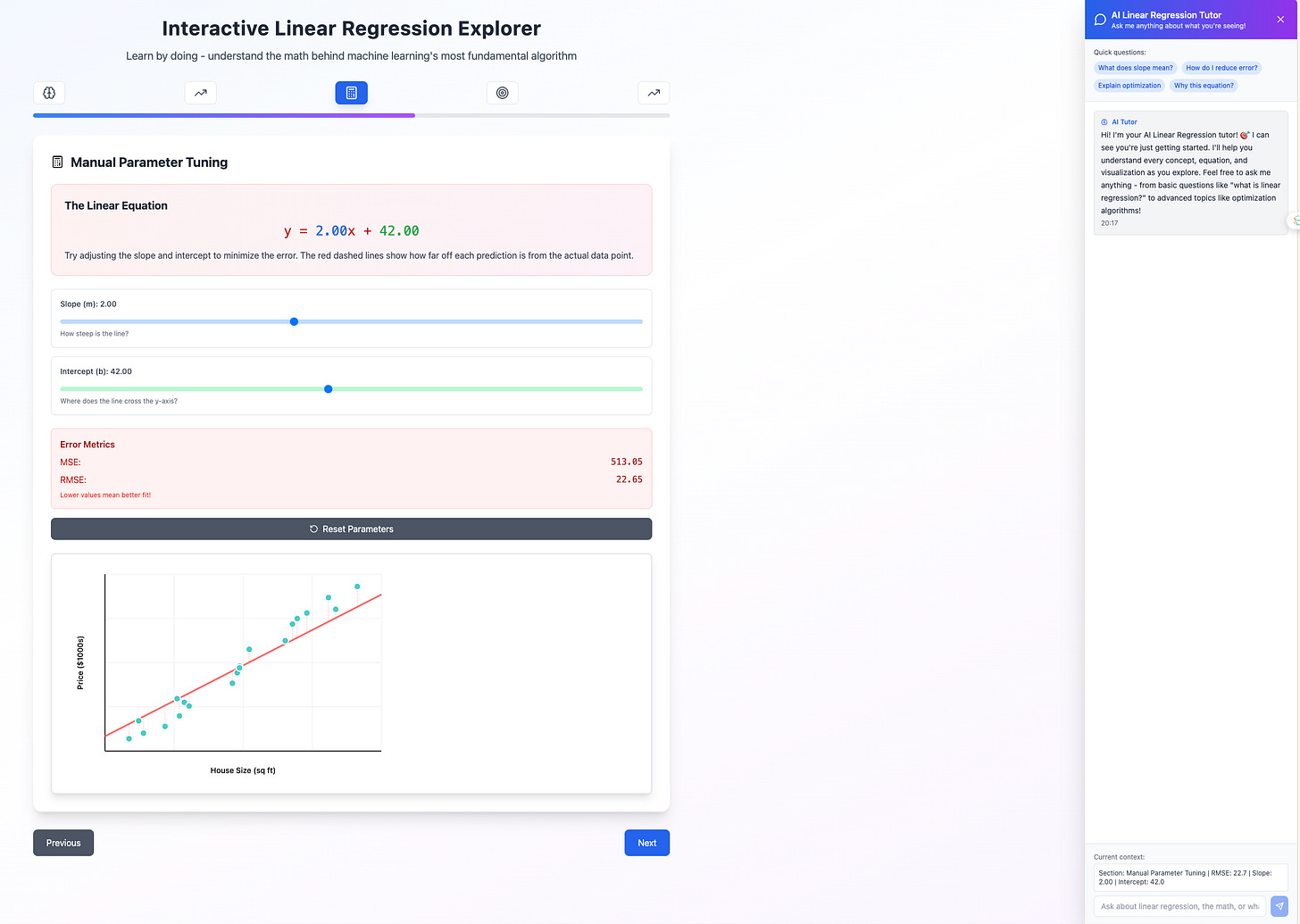
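Under the hood, the “move and flip” buttons boil down to a small operation on the equation’s terms: moving a term across the equals sign is the same as subtracting it from both sides. This is a minimal sketch under assumed data structures (`Term`, `Equation`, `moveTerm` and `formatEquation` are hypothetical, not the app’s code), restricted to linear terms.

```typescript
// A term is a coefficient with an optional variable; no variable means a constant.
interface Term {
  coef: number;
  variable?: string;
}

interface Equation {
  left: Term[];
  right: Term[];
}

type Side = "left" | "right";

// Remove the term from one side and append it to the other with its sign flipped.
function moveTerm(eq: Equation, from: Side, index: number): Equation {
  const term = eq[from][index];
  if (term === undefined) throw new Error(`No term at index ${index} on the ${from} side`);
  const remaining = eq[from].filter((_, i) => i !== index);
  const to: Side = from === "left" ? "right" : "left";
  const moved = [...eq[to], { ...term, coef: -term.coef }];
  return from === "left" ? { left: remaining, right: moved } : { left: moved, right: remaining };
}

// Render one side, e.g. [2x, -3] as "2x - 3"; an empty side renders as "0".
function formatSide(terms: Term[]): string {
  if (terms.length === 0) return "0";
  return terms
    .map((t, i) => {
      const abs = Math.abs(t.coef);
      const body = t.variable ? `${abs === 1 ? "" : abs}${t.variable}` : `${abs}`;
      if (i === 0) return t.coef < 0 ? `-${body}` : body;
      return t.coef < 0 ? ` - ${body}` : ` + ${body}`;
    })
    .join("");
}

function formatEquation(eq: Equation): string {
  return `${formatSide(eq.left)} = ${formatSide(eq.right)}`;
}
```

For example, moving the `3` in `2x + 3 = 7` gives `2x = 7 - 3`. Returning a new `Equation` rather than mutating the old one keeps every step intact, so the step history can be displayed and passed to the model when it writes an annotation.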

Linear Regression Explainer with AI Tutor

Finally, an interactive explainer that lets a learner discover linear regression by adjusting the parameters to minimize the errors. An LLM-powered chatbot tutor is available as a pop-out side panel.

Try: Linear Regression Explorer
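The post doesn’t say which error measure the explorer uses, so this sketch assumes the common choice: the sum of squared errors, SSE = Σ (yᵢ − (m·xᵢ + b))², for a line y = m·x + b. It computes that quantity and, for comparison, the ordinary least-squares line that minimises it. The names `sumSquaredErrors` and `leastSquares` are illustrative.

```typescript
interface Point {
  x: number;
  y: number;
}

// Sum of squared errors for the line y = slope * x + intercept.
function sumSquaredErrors(points: Point[], slope: number, intercept: number): number {
  return points.reduce((acc, p) => {
    const residual = p.y - (slope * p.x + intercept);
    return acc + residual * residual;
  }, 0);
}

// Ordinary least squares: the slope and intercept that minimise the SSE.
function leastSquares(points: Point[]): { slope: number; intercept: number } {
  const n = points.length;
  const meanX = points.reduce((s, p) => s + p.x, 0) / n;
  const meanY = points.reduce((s, p) => s + p.y, 0) / n;
  let sxy = 0;
  let sxx = 0;
  for (const p of points) {
    sxy += (p.x - meanX) * (p.y - meanY);
    sxx += (p.x - meanX) ** 2;
  }
  if (sxx === 0) throw new Error("Need at least two distinct x values");
  const slope = sxy / sxx;
  return { slope, intercept: meanY - slope * meanX };
}
```

In an explorer, the learner’s current SSE could be shown next to the least-squares minimum, and both numbers passed to the tutor so its hints reflect how close the learner actually is.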

What’s Still Missing?

As impressive as the new Claude Artefacts are, there’s still room to take things to the next level. Here are a few current limitations I ran into, along with some hopeful suggestions for Anthropic:

Limited Download Support:

Most of the apps I built only offer a “Copy Markdown” option. That’s because I couldn’t get file downloads to work within the sandboxed environment. For apps that generate code or learning content, this is a bit of a blocker.

No Parallel API Calls:

While multiple sequential API calls are possible (and I used them in apps like the Six Thinking Hats simulator and the LiaScript course generator), I couldn’t get Claude to implement parallel execution using Promise.all() or similar constructs. Adding support for concurrent operations would greatly improve performance in more complex apps.

No External Deployment (Yet):

You can export the TypeScript code, but it references a callClaude() function that isn’t defined in the bundle. Without that, you can’t easily redeploy the app elsewhere. Sure, it could be reverse-engineered, but a full-code export option, including the Claude API integration, would be a huge step forward.

No Built-in Datastore:

One major wish: a simple per-user datastore. Something lightweight, just enough to persist data between sessions or personalize the experience. This would open up even richer use cases for learning apps, like tracking progress or storing reflective responses.
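Two of these gaps can be sketched in code, assuming the app is redeployed outside the sandbox. First, a hypothetical stand-in for the missing `callClaude()`, built on Anthropic’s public Messages API (`POST /v1/messages` with the `x-api-key` and `anthropic-version` headers). The `(prompt) => Promise<string>` shape, the helper names `makeCallClaude` and `generateSections`, and the 1024-token default are assumptions; you would supply a current model ID, and the code is meant to run server-side so the API key stays private. Second, once such a function exists, running independent section requests concurrently is a matter of `Promise.all`.

```typescript
// Minimal fetch-like type so the sketch doesn't depend on DOM or Node typings;
// the global fetch in modern browsers and Node 18+ satisfies it.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

type CallClaude = (prompt: string) => Promise<string>;

interface ClaudeOptions {
  apiKey: string;
  model: string; // supply a current model ID; none is assumed here
  maxTokens?: number;
}

// Hypothetical replacement for the undefined callClaude(), using the Messages API.
function makeCallClaude(opts: ClaudeOptions, fetchImpl: FetchLike): CallClaude {
  return async (prompt: string) => {
    const res = await fetchImpl("https://api.anthropic.com/v1/messages", {
      method: "POST",
      headers: {
        "x-api-key": opts.apiKey,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
      },
      body: JSON.stringify({
        model: opts.model,
        max_tokens: opts.maxTokens ?? 1024,
        messages: [{ role: "user", content: prompt }],
      }),
    });
    if (!res.ok) throw new Error(`Claude API request failed with status ${res.status}`);
    const data = (await res.json()) as { content?: { type: string; text?: string }[] };
    // The reply is a list of content blocks; keep and join the text ones.
    return (data.content ?? [])
      .filter((block) => block.type === "text")
      .map((block) => block.text ?? "")
      .join("");
  };
}

// Parallel version of a per-section loop: all requests start at once,
// and Promise.all returns the results in the same order as the titles.
function generateSections(callClaude: CallClaude, topic: string, titles: string[]): Promise<string[]> {
  return Promise.all(
    titles.map((title) => callClaude(`Write the "${title}" section of a course on ${topic} in Markdown.`)),
  );
}
```

Usage might look like `generateSections(makeCallClaude({ apiKey, model }, fetch), topic, titles)`. Note that `Promise.all` rejects as soon as any request fails; `Promise.allSettled` is an alternative when partial results are acceptable, and a large course may need a concurrency limit to stay within API rate limits.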

Despite these gaps, what is already possible feels like a glimpse of the near future. These missing pieces, if addressed, could make Claude Artefacts an essential tool in the educational app builder’s toolkit.

Imagine the Future

Now take everything I’ve described and imagine it integrated directly into a Learning Management System!

Picture an educator, without coding expertise, simply describing a smart learning tool: a formative feedback generator, a debate coach, a dynamic quiz engine, or a concept visualizer. Within moments, they’re testing it, refining it, and deploying it to students. And not just as isolated tools, but connected to the LMS, able to track student interactions, adapt to progress, and feed insights back to teachers in real time.

No dev cycles. No procurement delays. Just idea → prototype → refine → deploy.

This isn’t some distant sci-fi scenario. The core components already exist. With Claude Artefacts, we’re edging toward this future. What’s missing is the integration layer, and the will to build platforms that treat educators as designers, not just content uploaders.

The future should be now.

Acknowledgements

Many of the app ideas I’ve explored here have their roots in earlier projects - developed in close collaboration with learning designers and educators (many of whom have since taken on new and impressive roles). Their creativity, insight, and commitment to improving student learning have shaped my thinking and continue to inspire what I build.

A huge shoutout to Professor Greg Winslett, Professor Shane Dawson, Liz Heathcote, Carrie Finn, Wendy Chalmers, Linda MacDonald, Anna Morris, and Dr Neville Smith. Thank you for the shared experiments, big ideas, and late-night whiteboard sessions.

Some of these concepts trace back to one of my earliest ventures into educational AI: the QUT homegrown LLM called OLT, which we documented here back in the day → OLT: Learning Design Templates.

It’s amazing to see how far we’ve come, and even more exciting to imagine what comes next.

Finally, ChatGPT 4o was used to help write this post! (Yes, I know, not Claude, but I’m a multi-model person.)
